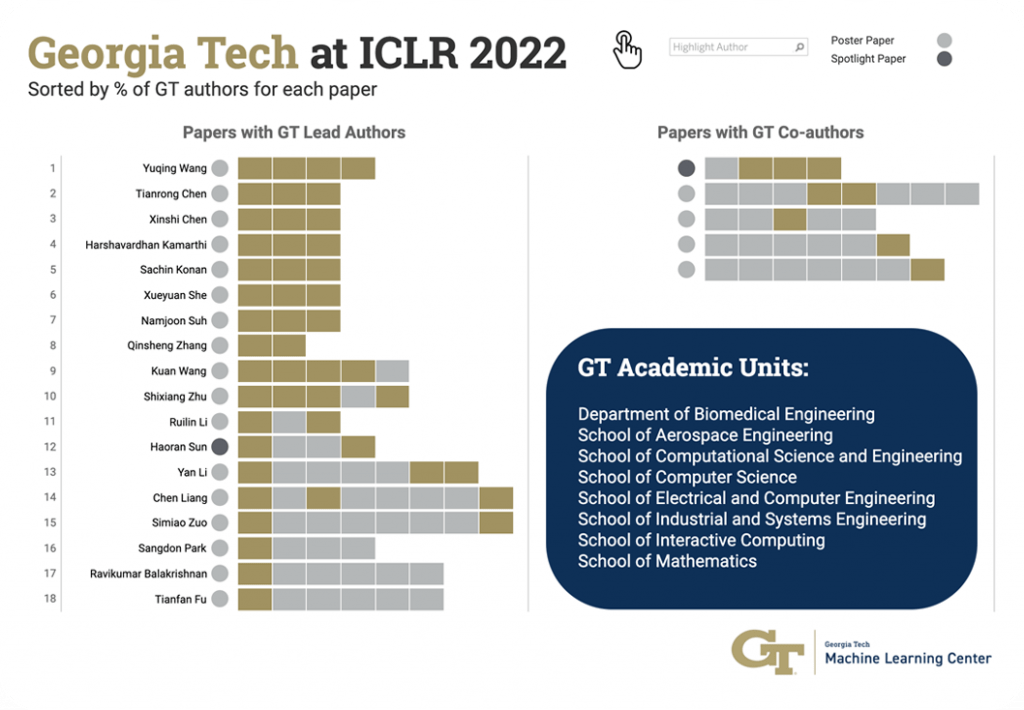

Georgia Tech at ICLR 2022

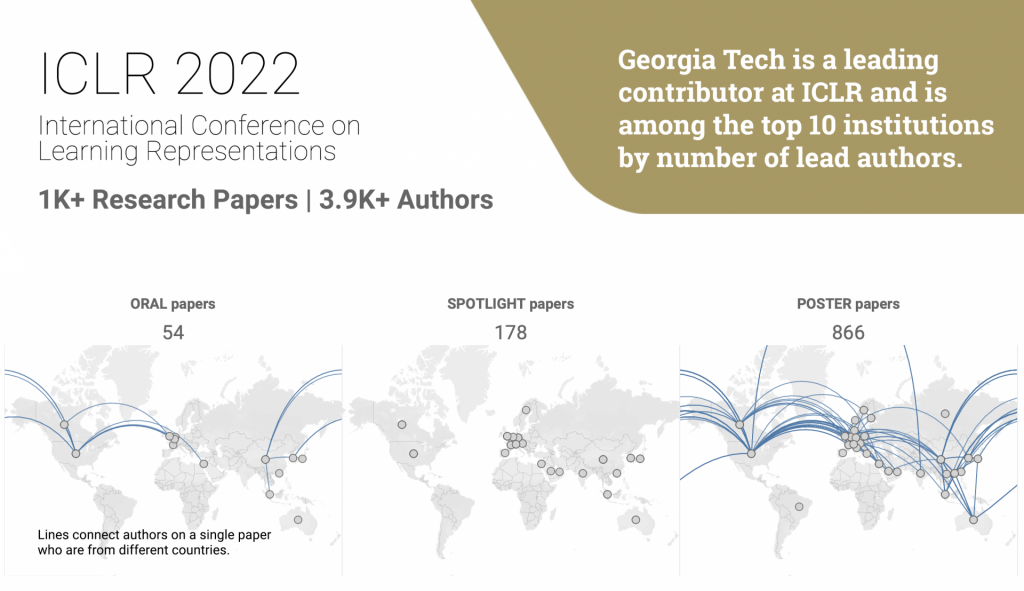

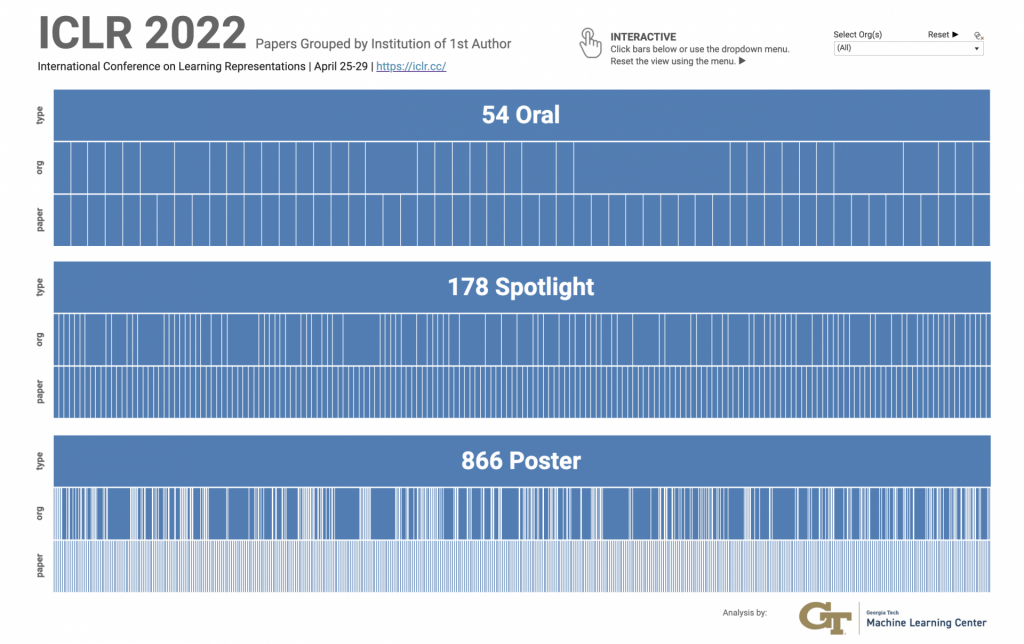

The International Conference on Learning Representations (ICLR), taking place April 25-29, 2022, is globally renowned for presenting and publishing cutting-edge research on all aspects of deep learning as used in artificial intelligence, statistics, and data science, as well as in important application areas such as machine vision, computational biology, speech recognition, text understanding, gaming, and robotics.

Explore our interactive virtual experience of Georgia Tech research at ICLR and see where the future of deep learning leads.